User Software and Computing

Computing Environment Setup: Batch System Advanced Topics

This page describes advanced topics for users of the condor batch system. It presumes the user already knows the basics of configuring condor batch jobs (or crab) at the cmslpc user access facility, as documented on the main batch system web page.

How to submit multicore jobs on the cmslpc nodes

Any additional job requirements will restrict which nodes your job can run on. Consider carefully any requirements/requests in a condor jdl given to you from other lpc users.

- Add to your condor multicore.jdl file (the file you submit, for instance with condor_submit multicore.jdl):

request_cpus = 4

- Note that most worker nodes have no more than 8 cores. You can request more cores, but you may have to wait for one of the fewer machines that have them to become available. See the main condor troubleshooting instructions for information on troubleshooting condor batch jobs.

- See the July 21, 2017 LPC Computing Discussion meeting slides and notes for how to profile your jobs' memory and CPU usage.

- From a Computing Tools hypernews discussion, add the following to your Pset.py to get a summary of your job's time and memory usage:

from Validation.Performance.TimeMemorySummary import customiseWithTimeMemorySummary
process = customiseWithTimeMemorySummary(process)

- Also see the explanation of dynamic (partitionable) slots below to understand how the cmslpc condor slots work. Do not request significantly more (or fewer) CPUs or memory than your job needs, so that those resources remain available for other users.

- Don't forget to configure your jobs to use the same number of cores you requested, for instance in cmsRunMulticore.py:

# Setup FWK for multithreaded
process.options.numberOfThreads = cms.untracked.uint32(4)
# 0 streams means: use one stream per thread
process.options.numberOfStreams = cms.untracked.uint32(0)

- CMSSW references on multi-threading:
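Because request_cpus in the JDL and numberOfThreads in the CMSSW configuration have to agree, it can help to generate both from a single number. The following is a minimal Python sketch of that idea, not an official tool: the function names, the wrapper script name run_cmsRun.sh, and the output/log file names are hypothetical placeholders, while the submit keywords (universe, executable, request_cpus, output, error, log, queue) are standard condor ones.

```python
# Hypothetical helper: derive the condor CPU request and the CMSSW thread
# settings from one number so the two cannot drift apart.
# run_cmsRun.sh and the job_* file names are placeholders.


def multicore_jdl(executable: str, ncpus: int) -> str:
    """Return the text of a minimal condor submit file requesting ncpus cores."""
    if ncpus < 1:
        raise ValueError("ncpus must be at least 1")
    lines = [
        "universe = vanilla",
        f"executable = {executable}",
        f"request_cpus = {ncpus}",
        "output = job_$(Cluster)_$(Process).out",
        "error = job_$(Cluster)_$(Process).err",
        "log = job_$(Cluster).log",
        "queue 1",
    ]
    return "\n".join(lines) + "\n"


def cmssw_thread_options(ncpus: int) -> str:
    """Return CMSSW config lines that use the same number of threads."""
    return (
        f"process.options.numberOfThreads = cms.untracked.uint32({ncpus})\n"
        # 0 streams means "one stream per thread" in CMSSW
        "process.options.numberOfStreams = cms.untracked.uint32(0)\n"
    )


if __name__ == "__main__":
    NCPUS = 4  # the one place the core count is set
    print(multicore_jdl("run_cmsRun.sh", NCPUS))
    print(cmssw_thread_options(NCPUS))
```

You would save the printed JDL text as multicore.jdl, paste the thread options into your configuration, and submit with condor_submit multicore.jdl.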

How do I request more memory for my batch jobs?

Any additional job requirements will restrict which nodes your job can run on. Consider carefully any requirements/requests in a condor jdl given to you from other lpc users.

- The requirement here specifies a machine with at least 2100 megabytes of memory available (the default slot size):

request_memory = 2100

- Use the memory requirement only if you do need it, keeping in mind that you may wait a significant amount of time for slots to be free with sufficient memory available.

- Requesting more memory will also negatively affect your condor priority, and it combines default job slots together (see dynamic (partitionable) slots below).
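To get a feel for how a memory request "combines" default slots, here is a rough Python sketch. It assumes a default slot of 1 core and 2100 MB, the default size quoted above, and simply takes whichever of CPU or memory needs more default slots. Condor's real accounting and priority calculation are more involved, and the function name is made up for illustration.

```python
import math

# Assumption: a default cmslpc slot is 1 core with 2100 MB of memory
# (the "default slot size" above). Rough model only, not condor's accounting.
DEFAULT_SLOT_CPUS = 1
DEFAULT_SLOT_MEMORY_MB = 2100


def default_slots_used(request_cpus: int = 1,
                       request_memory_mb: int = DEFAULT_SLOT_MEMORY_MB) -> int:
    """Estimate how many default slots' worth of resources one job occupies."""
    by_cpu = math.ceil(request_cpus / DEFAULT_SLOT_CPUS)
    by_memory = math.ceil(request_memory_mb / DEFAULT_SLOT_MEMORY_MB)
    # Whichever resource is larger decides how many default slots are merged.
    return max(by_cpu, by_memory)


if __name__ == "__main__":
    for cpus, mem in [(1, 2100), (1, 4200), (1, 8000), (4, 2100)]:
        print(f"request_cpus={cpus} request_memory={mem}: "
              f"~{default_slots_used(cpus, mem)} default slot(s)")
```

Under this model a single-core job with request_memory = 8000 ties up as much of a node as a 4-core job with default memory.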

How do I request more local disk space for my batch jobs?

Any additional job requirements will restrict which nodes your job can run on. Consider carefully any requirements/requests in a condor jdl given to you from other lpc users. If your job needs more than 40GB of space, you will have to split up your processing into multiple jobs. Keep in mind that most filesystems (EOS included) perform best with individual files of 1-5GB each.

- A setting documented here in the past was:

request_disk = 1000000

The value is in KiB, so this restricts the job to nodes with 1.024 GB or more of disk. As of March 3, 2017, adding this 1GB disk requirement removed 1767 CPUs from the set that could run your job. Increasing these numbers restricts the number of machines you can run on even further (completely, if too high).

- In default partitioning, users can use up to 40GB of disk per slot of 1 core and 2GB memory.

- Additionally, due to slot partitioning (see dynamic(partitionable) slots below), requesting large disk will reduce the resources available for other users.

- Be sure to request only the disk you need, up to 40GB; best of all is not to request disk space at all.
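The unit arithmetic for request_disk is easy to get wrong, since the value is in KiB. Below is a small Python sketch, assuming decimal gigabytes (which is how 1000000 KiB comes out as 1.024 GB above) and the 40GB per-slot limit from this page; the helper names are hypothetical.

```python
# request_disk is given in KiB. "GB" here means 10**9 bytes, which is how
# 1000000 KiB works out to 1.024 GB in the text above.
KIB_BYTES = 1024
GB_BYTES = 10**9
MAX_DISK_GB = 40  # per-slot disk limit on cmslpc, per this page


def kib_to_gb(kib: int) -> float:
    """Convert a request_disk value (KiB) to decimal gigabytes."""
    return kib * KIB_BYTES / GB_BYTES


def gb_to_kib(gb: int) -> int:
    """Smallest whole number of KiB covering `gb` decimal gigabytes."""
    return -(-gb * GB_BYTES // KIB_BYTES)  # ceiling division on integers


def check_request_disk(kib: int) -> None:
    """Raise if a request_disk value exceeds the 40GB per-slot limit."""
    if kib_to_gb(kib) > MAX_DISK_GB:
        raise ValueError(
            f"request_disk = {kib} KiB is {kib_to_gb(kib):.1f} GB; "
            f"the limit is {MAX_DISK_GB} GB, so split the job instead"
        )


if __name__ == "__main__":
    print(kib_to_gb(1000000))  # the old documented setting, in GB
    print(gb_to_kib(40))       # largest request_disk that stays within 40GB
    check_request_disk(gb_to_kib(40))
```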

For a complete and long list of possible requirement settings, see the condor user's manual.

An explanation of the cmslpc dynamic(partitionable) condor slots

For a longer explanation and discussion of cmslpc dynamic(partitionable) slots, see the August 4, 2017 LPC Computing Discussion slides and minutes

- cmslpc worker nodes have anywhere from 8 to 24 "CPU" (actually cores) available. Each core has ~2GB of memory and 40GB of disk space for a job.

- The job slots are created when a condor job asks for them.

- The default job slot is 1 CPU, 2GB memory, and 40GB disk space.

- A job slot can take more CPU and/or memory, but NOT more disk space; 40GB is the limit.

- Examples: to see how a worker node is partitioned, we look at an 8-core machine with 16GB RAM. We ignore disk space and presume it is the default.

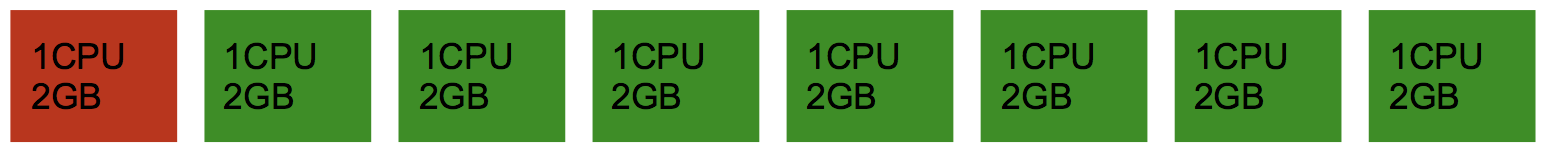

- Default job of 1 CPU, 2GB memory (running job in red; unclaimed, not-yet-existing slots in green):

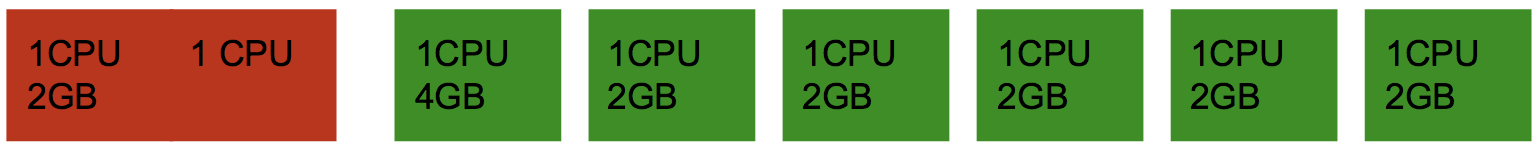

- request_cpus = 2, default 2GB memory (running job in red; unclaimed, not-yet-existing slots in green):

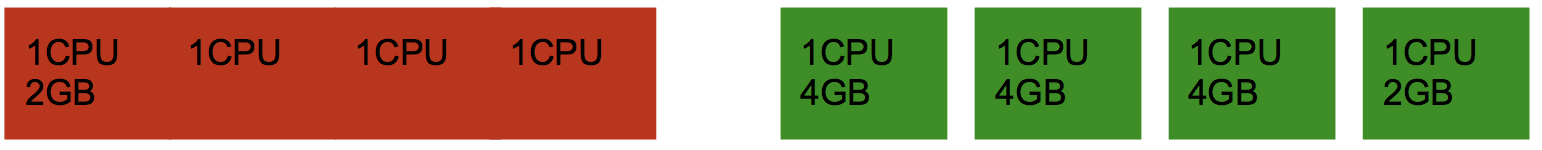

- request_cpus = 4, default 2GB memory (running job in red; unclaimed, not-yet-existing slots in green):

- To find out how many default slots are available, condor_status will not tell you, as slots only exist once they are made (cmslpc jobs only have access to cmswn machines, and not all slots are listed). Instead, go to the CMS LPC System Status Landscape monitoring page; the red line in "Slots" shows the maximum number of default slots available.

- Note that when you run condor_status, the "slot1@cmswn" slot for each machine will never be claimed, as that is the slot that creates the others, such as "slot1_1", "slot1_21", etc.
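The partitioning pictured above can be mimicked with a toy model. This Python sketch is only an illustration, not how condor is implemented: it assumes an 8-core, 16GB node with 2GB (2048 MB) per default slot, and ignores disk, as the pictures do. The class and method names are invented; the child slot names only imitate the slot1_1, slot1_2, ... style seen in condor_status.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

DEFAULT_CPUS = 1
DEFAULT_MEMORY_MB = 2048  # the "2GB" default slot in the pictures above


@dataclass
class PartitionableSlot:
    """Toy model of the parent slot ("slot1@...") on one worker node.

    It never runs jobs itself; each claim carves a dynamic child slot
    out of the remaining cores and memory. Disk is ignored.
    """
    cpus: int
    memory_mb: int
    children: List[Tuple[str, int, int]] = field(default_factory=list)

    def claim(self, request_cpus: int = DEFAULT_CPUS,
              request_memory_mb: int = DEFAULT_MEMORY_MB) -> Optional[str]:
        """Create a child slot for one job, or return None if it does not fit."""
        if request_cpus > self.cpus or request_memory_mb > self.memory_mb:
            return None
        self.cpus -= request_cpus
        self.memory_mb -= request_memory_mb
        name = f"slot1_{len(self.children) + 1}"
        self.children.append((name, request_cpus, request_memory_mb))
        return name

    def default_slots_left(self) -> int:
        """How many more default (1 core, 2GB) jobs could still start here."""
        return min(self.cpus // DEFAULT_CPUS,
                   self.memory_mb // DEFAULT_MEMORY_MB)


if __name__ == "__main__":
    node = PartitionableSlot(cpus=8, memory_mb=16 * 1024)  # 8 cores, 16GB
    node.claim(request_cpus=4)                  # 4 cores, default 2GB
    print(node.children, node.default_slots_left())

    node = PartitionableSlot(cpus=8, memory_mb=16 * 1024)
    node.claim(request_memory_mb=8 * 1024)      # 1 core, but 8GB memory
    print(node.children, node.default_slots_left())
```

In the second case a single-core job that asks for 8GB leaves room for only 4 more default jobs, the same as the 4-core job, because memory rather than cores runs out first.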

How is condor configured on the cmslpc nodes? Useful for troubleshooting

Please see also the main condor troubleshooting instructions.

To find out how a certain variable is configured in condor by default on the cmslpc nodes, use the condor_config_val -name lpcschedd1.fnal.gov command. The name of a scheduler is needed as the schedulers handle the job negotiation, and all three are configured the same.

condor_config_val -dump -name lpcschedd1.fnal.gov

This dumps all the condor configuration variables that are set by default. To understand more about these variables, consult the Condor user's manual.
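If you want to search the dump programmatically rather than by eye, a short Python sketch can run the same command and turn its output into a dictionary. It assumes that condor_config_val is on your PATH (as on the cmslpc interactive nodes) and that the dump lists one NAME = value per line, with comments starting with #; the function names are hypothetical. Names are upper-cased because condor configuration variable names are case-insensitive.

```python
import subprocess
from typing import Dict


def parse_config_dump(text: str) -> Dict[str, str]:
    """Turn "NAME = value" lines into a dict, skipping comments and blanks.

    Assumes one "NAME = value" per line; other lines are ignored rather
    than guessed at. Names are upper-cased since condor treats them
    case-insensitively.
    """
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, _, value = line.partition("=")  # split on the first "=" only
        config[name.strip().upper()] = value.strip()
    return config


def dump_schedd_config(schedd: str = "lpcschedd1.fnal.gov") -> Dict[str, str]:
    """Run condor_config_val -dump -name <schedd> and parse its output."""
    result = subprocess.run(
        ["condor_config_val", "-dump", "-name", schedd],
        capture_output=True, text=True, check=True,
    )
    return parse_config_dump(result.stdout)
```

For example, cfg = dump_schedd_config() followed by cfg.get("SOME_VARIABLE"), where SOME_VARIABLE is a placeholder for whichever setting you are investigating.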

Other condor topics

Condor user's manual

For more information, visit the Condor user's manual.

Find the version of condor running on the LPC with condor_q -version.

About compilation of code for cmslpc condor

One important note: compilation of code is not supported on the remote worker nodes. This includes ROOT's ACLiC (i.e. the plus sign at the end of root -b -q foo.C+). You can compile on the LPC interactive nodes and transfer the executables and shared libraries to the worker node. For root -b -q foo.C+, you will need to transfer the recently compiled foo* files to the condor job.
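Collecting the foo* files by hand is error-prone, so here is a minimal Python sketch that builds the condor transfer_input_files line for a compiled macro. It matches the same foo* pattern described above (so it would also pick up an unrelated file such as foobar.txt); the function names are hypothetical, and the file names in the docstring are only typical ACLiC outputs.

```python
import os
from typing import List


def aclic_outputs(macro: str, directory: str = ".") -> List[str]:
    """List files in `directory` whose names start with the macro's stem.

    For foo.C this matches foo* (typically foo.C, foo_C.so, foo_C.d),
    i.e. the files that need to travel with the condor job.
    """
    stem = os.path.splitext(os.path.basename(macro))[0]
    return sorted(
        os.path.join(directory, name)
        for name in os.listdir(directory)
        if name.startswith(stem)
    )


def transfer_line(files: List[str]) -> str:
    """Format a condor transfer_input_files line (comma-separated)."""
    return "transfer_input_files = " + ",".join(files)


if __name__ == "__main__":
    # Run on an interactive node after `root -b -q foo.C+` has compiled foo.C.
    print(transfer_line(aclic_outputs("foo.C")))
```

The printed line can be pasted into the job's JDL file.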